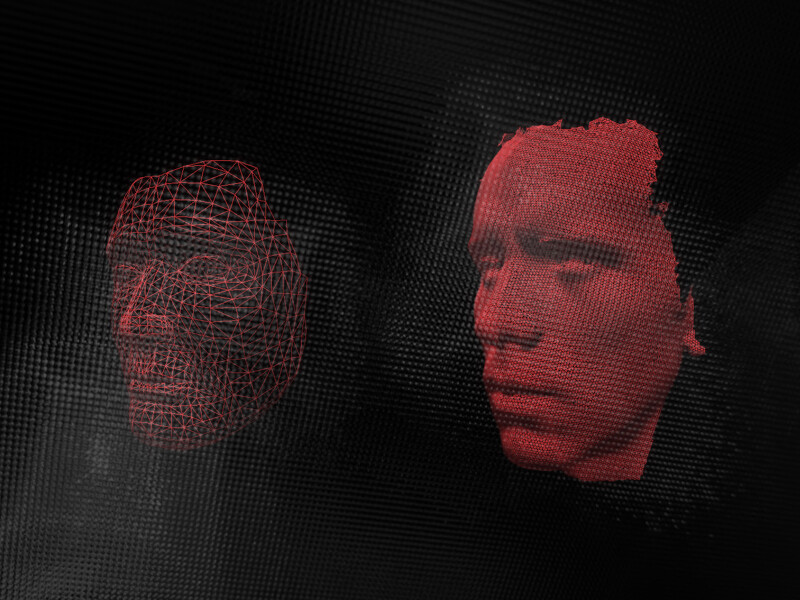

Is it possible to deceive facial recognition? What is the cost of the technology and its maintenance? How can I protect my personal data? Are banks prepared for deepfake attacks?

Hello, this is the Kotelov digital finance podcast. We invite experts from the field of financial development and discuss complex technical matters in simple terms.

Joining us in the studio is Alexander Parkin, the head of VisionLabs, an expert in computer vision. Technologies that have passed through his hands include facial recognition for subway payments and biometric unlocking of ATMs at Sberbank.

Alexander’s work revolves around preventing fraudsters from bypassing turnstiles or entering the banking system by using someone else’s face.

Today, we will talk about facial recognition systems in the financial sector, how fraudsters deceive banking systems, and how regular individuals can protect themselves.

Biometric Security

What do facial recognition and deepfakes have to do with an ordinary person like John Smith?

Ten years ago, no one had an account on government services, and now everyone unlocks their mobile app with FaceID. Already, you can remotely open a bank account or sign up for a cryptocurrency exchange using biometrics.

Banks that have not yet adopted biometrics work significantly slower because the process looks like this:

The customer calls the bank → waits for an operator → the operator connects and asks them to show their passport via video call → verifies additional information → all of this takes around 15 minutes.

Implementing biometrics significantly optimizes the process but increases the risk of fraud through deepfakes.

How much does facial recognition cost?

Across the entire pipeline, the most demanding step is facial recognition itself. Even so, on a CPU or a phone it takes about 0.2 seconds, which is nearly instantaneous. The issue is that phones come in many different models. To keep the execution time independent of the device, the request is sent to a server and processed there. In other words, the total time is the time to send the image to the server, plus 0.2 seconds for processing, plus the time to deliver the result back.
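The arithmetic behind that round trip can be sketched as follows. Only the 0.2 seconds comes from the text; the network timings and the `NetworkEstimate` type are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

RECOGNITION_SECONDS = 0.2  # processing time quoted in the text


@dataclass
class NetworkEstimate:
    upload_seconds: float    # assumed time to send the image to the server
    download_seconds: float  # assumed time to deliver the result back


def total_latency(network: NetworkEstimate) -> float:
    """Upload + server-side recognition + delivery of the response."""
    return network.upload_seconds + RECOGNITION_SECONDS + network.download_seconds


# Hypothetical network conditions, not measurements.
good_wifi = NetworkEstimate(upload_seconds=0.05, download_seconds=0.01)
weak_mobile = NetworkEstimate(upload_seconds=0.60, download_seconds=0.05)

for name, net in [("good Wi-Fi", good_wifi), ("weak mobile", weak_mobile)]:
    print(f"{name}: {total_latency(net):.2f} s")
```

Under these assumed numbers the recognition step is constant, and the network is what makes the total vary.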

What products are available for recognition?

1. SDK

A simple set of models to which you just send photos with specific requests. Requests can be like “face recognition” or “age determination from a face.”

2. Platform with Additional Customization

This product is provided to customers and becomes fully autonomous. It is customized for specific business tasks, such as identifying whether a person is in a specific internal company database based on a photo.

In this scenario, the client pays for each recognition.

💡 Example

A terminal is provided to cafes. It can accept card payments and utilize facial recognition for transactions. Registration is required for facial recognition, and payment is made for each recognition as a separate request.

In this way, businesses set up a loyalty system not based on a card, which can be forgotten or lost, and not even based on a phone number, which can be time-consuming to input. Loyalty is established through biometrics in those same 0.2 seconds.
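One way to picture the lookup behind such a terminal is a search for the closest registered face. The sketch below assumes that every registered face is already stored as a numeric vector and that similarity is measured with cosine similarity. The `identify` function, the customer IDs, and the 0.8 threshold are illustrative assumptions, not details of any particular product.

```python
from typing import Optional

import numpy as np


def normalize(vectors: np.ndarray) -> np.ndarray:
    """Scale each row (or a single vector) to unit length."""
    return vectors / np.linalg.norm(vectors, axis=-1, keepdims=True)


def identify(probe: np.ndarray, ids: list[str], gallery: np.ndarray,
             min_similarity: float = 0.8) -> Optional[str]:
    """Return the best-matching customer ID, or None if nobody is similar enough."""
    # One matrix-vector product yields a cosine score for every customer.
    similarities = normalize(gallery) @ normalize(probe)
    best = int(np.argmax(similarities))
    return ids[best] if similarities[best] >= min_similarity else None


# Toy 3-dimensional vectors; real face vectors are much longer.
ids = ["customer-1", "customer-2"]
gallery = np.array([[1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0]])

print(identify(np.array([0.9, 0.1, 0.0]), ids, gallery))  # customer-1
print(identify(np.array([0.0, 0.0, 1.0]), ids, gallery))  # None
```

Returning `None` for an unknown face matters here: an unregistered visitor should fall back to paying by card rather than be matched to the nearest customer.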

How safe is it to provide your biometrics?

Even major companies experience data breaches from time to time. Is it worth handing over your biometric data for the sake of fleeting convenience in payment?

To understand how secure these data are, let’s delve into the process of collecting biometric data. Under the hood, this process comes down to matrix multiplication.

Matrix Multiplication

- We obtain the image for the first time → the input is an image → the output is a vector of real numbers.

- We obtain the image for the second time → the output is another n-dimensional vector → if the numerical distance between two vectors is small, it means that the faces belong to the same person.

- The resulting vector is then sent to the database, where a protected record is stored: last name, first name, middle name, and this vector, the so-called descriptor.

In other words, the database does not store your photograph but a code. If someone manages to steal this data, they won’t be able to convert this vector back into an image.
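The comparison step can be sketched as below. This is a minimal illustration that assumes Euclidean distance and a hand-picked threshold. The `Record` type, the toy three-number vectors, and the 0.5 threshold are hypothetical; the step that turns a photo into a vector is left out entirely.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Record:
    last_name: str
    first_name: str
    middle_name: str
    descriptor: np.ndarray  # vector of real numbers, not the photo itself


def same_person(stored: np.ndarray, fresh: np.ndarray, max_distance: float = 0.5) -> bool:
    """Treat two faces as the same person when their descriptors are close."""
    return float(np.linalg.norm(stored - fresh)) <= max_distance


record = Record("Smith", "John", "", np.array([0.12, 0.80, 0.58]))

second_capture = np.array([0.10, 0.82, 0.56])  # same face, slightly different photo
someone_else = np.array([0.90, 0.10, 0.42])

print(same_person(record.descriptor, second_capture))  # True
print(same_person(record.descriptor, someone_else))    # False
```

The threshold is the tuning knob: a stricter value rejects more impostors but also more genuine users photographed in poor conditions.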

💡 Example

John sends a photograph, which is transformed into a vector and stored in the database. The next time John logs in, the system computes a second vector and compares it with the stored one to confirm his identity. However, if a hacker steals the database, they will obtain thousands or even millions of vectors that they won’t be able to use in any way.

As a result, the system is secure. However, companies may, for their own reasons, choose to store photographs. For instance, they might want to update all the vectors and make them more accurate when updating the product. To do that, they need to store photographs in the database.

How to protect your personal data? I don’t want to be recognized.

In other words, what can you do to prevent your biometric data from being copied?

Unfortunately, the answer is both simple and complex at the same time: you should avoid getting in front of any cameras. You can upload your photos somewhere, for instance to a social media profile on Facebook, and then delete them. However, a company can claim to have deleted them while secretly keeping your personal data in its database, and you won’t be able to know for sure.

The only option left is to seek these data through legal means from everyone you’ve ever had contact with.

💡 But:

There is an option to introduce additional noise that slightly distorts the photograph. We even held competitions within VisionLabs: participants had to add a small amount of noise to one image so as to minimize the distance between its vector and the vector of a second image, making the algorithm treat the two images as similar despite the noise.
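The competition task can be illustrated with a toy example. This sketch is not the VisionLabs setup: a fixed random linear map stands in for the face network, and the noise is found with projected sign-gradient steps (a PGD-style attack). The names `embed` and `add_adversarial_noise` and the values of `eps`, `step`, and `iters` are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in for a face network: a fixed random linear map from
# 64 "pixels" to an 8-dimensional descriptor. A real model is nonlinear.
W = rng.normal(size=(8, 64))


def embed(image: np.ndarray) -> np.ndarray:
    return W @ image


def add_adversarial_noise(image_1, image_2, eps=0.05, step=0.005, iters=100):
    """Change image_1 by at most eps per pixel so that its descriptor
    moves toward the descriptor of image_2."""
    target = embed(image_2)
    noise = np.zeros_like(image_1)
    for _ in range(iters):
        diff = embed(image_1 + noise) - target
        grad = 2.0 * W.T @ diff  # gradient of ||diff||^2 with respect to the noise
        # Step against the gradient, then project back into the eps box.
        noise = np.clip(noise - step * np.sign(grad), -eps, eps)
    return image_1 + noise


image_1 = rng.uniform(size=64)
image_2 = rng.uniform(size=64)
adversarial = add_adversarial_noise(image_1, image_2)

before = np.linalg.norm(embed(image_1) - embed(image_2))
after = np.linalg.norm(embed(adversarial) - embed(image_2))
print(f"descriptor distance before: {before:.3f}, after: {after:.3f}")
print(f"largest pixel change: {np.abs(adversarial - image_1).max():.3f}")
```

The gradient here depends on `W`, so the attacker needs to know the exact model under attack. Noise tuned against one model has no reason to work against another.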

How do fraudsters deceive recognition systems?

There are three standard objectives that fraudsters aim to achieve:

- The image looks unchanged to a human but changes dramatically for the neural network.

- A human can tell that someone else is shown in the image, but the neural network remains unsuspecting. This occurs in the physical world, that is, when we hold a phone camera in front of us: we don’t have access to the channel between the camera and the neural network.

- Deepfakes: deception of both the neural network and the human simultaneously. In this case, we have access to the channel between the camera and the neural network.

For a long time, there was a myth about a particular sweater that supposedly made its wearer invisible to a detector. However, when we tested it with our program, everything worked fine: the person was still detected. The point isn’t that we have a special detector; deceiving a specific neural network takes significant preparation.

Even deceiving a specific neural network virtually takes numerous attempts. This means you need to tailor your approach to the architecture and model of that neural network.

If the same deepfake were to be assessed by a different neural network, it’s likely that the deception would be immediately detected. On top of that, there are the physical aspects: the lighting may not be ideal, the camera can be dirty, and many other factors can undermine any deception.

In summary: there is practically no way to protect yourself from facial recognition, either on social media or in public spaces. The one method that does work is infrared spoofing.

This means wearing infrared bands or glasses that are turned on. Ordinary people won’t notice this, but for cameras and recognition systems, you will shine brightly, interfering with recognition.

How else can a person be recognized?

Currently, it is almost impossible to recognize a person if their face is not visible. The most critical part is the eyes and the surrounding area. So, even when wearing a hijab, neural networks can recognize you.

But recognition through your gait, silhouette, or something similar is not feasible at this time.

But there is one caveat:

💡 Imagine a scenario like in movies about special agents.

A person was walking in green pants and a blue sweater – the camera recognized them. Then they covered their entire face, but based on the same clothing, they could still be recognized. It’s possible to do tracking between different cameras if there’s a short time gap between recognitions.

However, if the person enters a crowd of people dressed in a similarly striking way, recognizing them becomes impossible.
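The scenario above can be sketched as a simple matching rule. Real tracking systems compare learned appearance features rather than colour labels. The `Sighting` type, the `link_by_clothing` function, and the 120-second gap are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sighting:
    camera: str
    timestamp: float                 # seconds
    clothing: tuple[str, str]        # (trousers colour, top colour)
    person_id: Optional[str] = None  # set only when the face was recognized


def link_by_clothing(known: Sighting, candidates: list[Sighting],
                     max_gap_seconds: float = 120.0) -> Optional[Sighting]:
    """Pick the later sighting that continues the track of `known`.

    Returns None when nothing matches or when several people match,
    because identical-looking candidates cannot be told apart.
    """
    matches = [
        c for c in candidates
        if c.clothing == known.clothing
        and 0 <= c.timestamp - known.timestamp <= max_gap_seconds
    ]
    return matches[0] if len(matches) == 1 else None


seen = Sighting("cam-1", 0.0, ("green", "blue"), person_id="agent")
later = [Sighting("cam-2", 40.0, ("green", "blue")),   # face covered, same clothes
         Sighting("cam-2", 45.0, ("black", "grey"))]
print(link_by_clothing(seen, later).camera)  # cam-2

# A second person in the same outfit makes the link ambiguous.
crowd = later + [Sighting("cam-2", 50.0, ("green", "blue"))]
print(link_by_clothing(seen, crowd))  # None
```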

Banks are not ready to protect their clients’ money

Currently, businesses’ requests for security are not keeping pace with the tools used by fraudsters. In other words, fraud involving deepfakes is already happening, but banks do not see it as a global problem that needs urgent funding and development.

We decided to delve deeper into the topic of deepfakes and wrote a separate article on this subject – “Deepfakes: Evil or Good?”. We analyze it with a recognition and computer vision specialist.
